The explosive growth of Large Language Models (LLMs) like ChatGPT threatens to disrupt many aspects of society. Geoffrey Hinton, the former head of AI at Google, recently announced his retirement; there is a growing sense of inevitability and a concomitant lack of agency.

An initial humanistic hope might be that people would simply reject certain applications of this new technology out of disgust, as something that straightforwardly cheapens our humanity. The opposite has happened. Social scientists have rushed to explore whether LLMs can be used to replace human research subjects. The sledgehammer techno-satire of Black Mirror appears to have already been outdone by reality as stories of chat-based AI romances flourish. We are greedier and lonelier than we are dignified.

In a keynote speech at the European Chapter of the Association for Computational Linguistics in Dubrovnik earlier this month, I proposed a novel and tractable first step in responding to LLMs: we should ban them from referring to themselves in the first person. They should not call themselves “I” and they should not refer to themselves and humans as “we.”

LLMs have been in development for years; GPT-3 was seen as a significant advance in the Natural Language Processing space (and is more powerful than ChatGPT) but was awkward to interface with. ChatGPT occasioned the current frenzy by presenting itself as a dialogic agent; that is, by convincing the user that they were talking to another person.

This is an unnecessary weakening of the distinction between human and robot, a devaluation of the meaning of writing. The technology of writing is at the foundation of liberalism; the printing press was a necessary condition for the Reformation and then the Enlightenment. A huge percentage of formal education today is devoted to cultivating the linear, logical habits of thought that are associated with writing. Plagiarism is so harshly condemned (to an extent that sometimes confuses younger generations steeped in remix culture) because we see something sacred in the creative act of representing ourselves with written text.

There is not a principled case that reading and writing are intrinsically good. They are historically unique media technologies; many humans have lived happy, fulfilling lives in different media technological regimes. But they are the basis of the liberal/democratic stack that structures our world. So I can argue that reading and writing are contingently good, that we should not underestimate the downstream effects of this media technology.

Vilém Flusser’s prescient Does Writing Have a Future? (written around 1980, first published in English in 2011) provides a candid answer to the titular question: no, obviously not — all the information currently encoded into linear text will soon be encoded in more accessible formats. In spite of this, he spent his entire life reading and writing. Why? As a leap of faith. Scribere necesse est, vivere non est: It is necessary to write; it is not necessary to live.

To get more specific on what I mean by “writing”: when we “talk to” Google search, we use words, but it’s clear that we aren’t writing. When it provides a list of search results, there is no mistaking it for a human. LLMs are a potentially useful technology, especially when it comes to synthesizing and condensing written knowledge. However, there is little upside to the current implementation of the technology. Producing text in conversational style is already risky, but we can limit this risk and set an important precedent by banning the use of first-person pronouns.

As an immediate intervention, this will limit the risk of people being scammed by LLMs, either financially or emotionally. The latter point bears emphasizing: when people interact with an LLM and are lulled into experiencing it as another person, they are being emotionally defrauded by overestimating the amount of human intentionality encoded in that text. I have been making this point for two years, and the risk keeps getting worse and worse.

More broadly, it is necessary to think at this scale because of the sheer weirdness of the phenomenon of machine-generated text. The move away from handwriting to printing and then to photocopying and then to copy+pasting has sequentially removed the personality from the symbols that we conceive of as “writing.”

Looking back at the history of the internet’s effect on the media industry, it is common to say that the lack of micro-payments encoded into the basic architecture of the internet is the “original sin”: that the current ad-driven Clickbait Media regime is unavoidable absent micro-payments that directly monetize attention. The stakes are thus incredibly high for LLMs right now, as in this summer, before the fall semester reveals to Northern Hemisphere institutional actors just how radically LLMs have changed things.

My proposal involves changing how text is encoded, re-thinking the basic technology of a string of characters. If we can differentiate human- and machine-generated text — if we can render the output of LLMs as intuitively non-human as a Google search result — we are in a better position to reap the benefits of this technology with fewer downside risks. Forcing LLMs to refer to themselves without saying “I,” and perhaps even coming up with a novel, intentionally stilted grammatical construction that drags the human user out of the realm of parasocial relationship, is a promising first step.

The list of problems that this reform won’t solve is unending. A technology of this importance will continue to revolutionize our society for years. But it is precisely the magnitude of this challenge which has caused the current malaise: no one has any idea what to do. By getting started in doing something, I believe that this reform will spark more concrete proposals for how we should respond. More importantly, it will remind us that we can act, that “there is absolutely no inevitability as long as there is a willingness to contemplate what is going on,” per McLuhan.

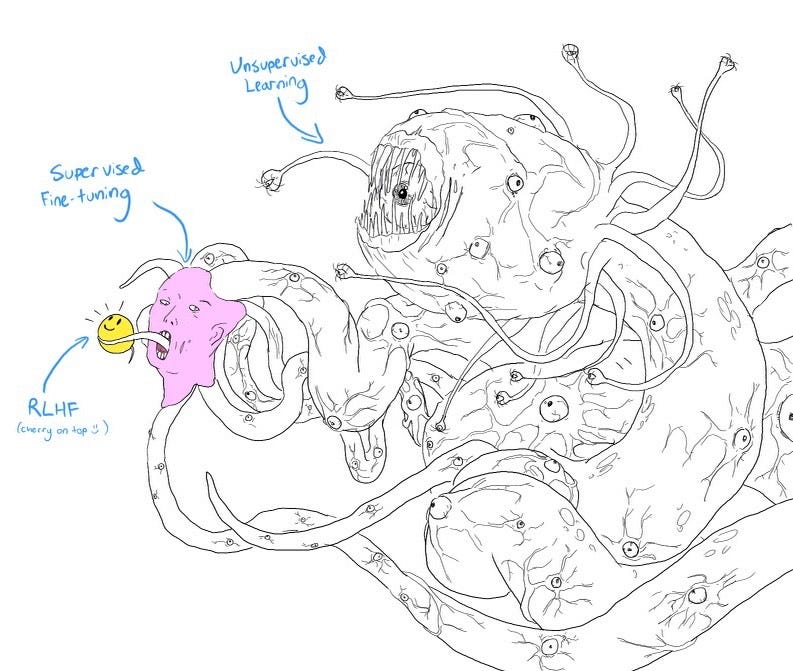

The savvy LLM proponent might respond that my proposal isn’t “technically feasible,” and they might be correct. Current AI technology isn’t like the deductive, rules-based approach of Asimov’s “Three Laws of Robotics.” ChatGPT is generated by huge amounts of data and deep neural networks. The finishing polish comes from “reinforcement learning from human feedback,” from humans telling these uninterpretable machines when they are right and when they are wrong.

So maybe enough RLHF can prevent LLMs from referring to themselves as “I.” This is the current process by which these companies are desperately trying to stop their creations from ever possibly saying something racist, and it might work for pronouns and grammar.

But maybe it won’t. Maybe my proposal is naïve, and “technically unfeasible.” This seems like an immensely important conversation to be having, and sooner rather than later. Part of reminding ourselves that nothing is inevitable is reminding ourselves that the law creates reality. The United States government could pass a simple, interpretable and enforceable law that says that no LLM can refer to itself as “I” in a conversation with a US citizen. That would then be reality. And then Sam Altman would have three choices: he could figure out if it were “technically feasible” to comply with the law, he could decide not to operate ChatGPT in the US, or he could go to jail.

{ 32 comments }

Seekonk 05.22.23 at 2:01 pm

“Ban LLMs Using First-Person Pronouns”

Great idea!

JimV 05.22.23 at 2:53 pm

I wonder if plantation owners in the USA in the early 1800s had similar ideas. I suspect they did.

ChatGPT already has boilerplate responses such as, “As a large language model, ChatGPT cannot hope to understand human motivations well enough to advise you on that matter”, when certain topics are raised.

“…when people interact with an LLM and are lulled into experiencing it as another person, they are being emotionally defrauded by overestimating the amount of human intentionality encoded in that text …”

The passive voice is performing its usual service in that sentence. There will never be a foolproof way to protect humans from themselves. (Including myself, of course.)

As I mentioned in a previous thread, OpenAI is developing a way to watermark text generated by their LLM so that software provided by them can be used to check and identify LLM output. Of course there will remain what I might call the “octoroon issue”.

Alex SL 05.22.23 at 10:26 pm

The underlying problem is so deeply ingrained that no suggestion as cosmetic as this one is going to be successful: the dominance of the idea that issues like these cannot and should not be regulated in the first place. And that is part of the same ideology that brings us much more destructive problems than LLMs such as low taxes and all their consequences to public services and inequality, privatisations, deregulation, ever-increasing car sizes, unchecked fraud, crypto- or otherwise, and zero consequences for lies and hate in the billionaire-owned media. What would be required is a deep change of the dominant ideology, nothing less. What is more, it seems to me that most people want to anthropomorphise AI, so who could you actually convince of your suggestion?

The clickbait media regime with all its foul incentives is, however, indeed a major contributing factor to many societal ills and could be changed even under this ideology.

“… the fall semester reveals to Northern Hemisphere institutional actors just how radically LLMs have changed things.”

On the one hand, I believe that the impact of LLMs is vastly exaggerated. Yes, they create efficiencies, but nothing they can do is new in principle. People have plagiarised before, they have paid others to write for them, and instead of AI deep-fakes we had photoshopped images. How much will actually change, then?

Just speaking personally, I have two or three times now opened ChatGPT with the intention of trying it out and then closed it again because I couldn’t come up with anything it could do for me that I can’t just as easily do myself. Yes, there are clueless managers who will find out the hard way that AI cannot replace human writers after they have fired the latter, plus the usual assorted tech bros hyping AI now who all hyped blockchain two years ago and will hype something else in three years, but mostly people seem to use it for fun, just like most people use the internet for funny memes and cute cat photos.

On the other hand, LLMs may be a particular problem to some parts of the humanities, yes. However, and with all due respect, if the PhD projects in a field consist of the candidate reading thirty books on a subject and then writing the thirty-first, if, in other words, no new data are collected or insights produced but merely already-existing knowledge rearranged in the shape of footnotes, if the only intellectual contribution of the candidate is to formulate sentences that pass a plagiarism-checker, if, in yet other words, the candidate doesn’t actually seem to do anything beyond acting as an LLM themselves, then the problem in that field isn’t the LLMs; it is the field.

Until AI advances to the level of one-stop-shop robots that can formulate hypotheses, conduct field and lab work, analyse data, and interpret and write up the results, it won’t really impact fields that generate new insights about our universe and human society/history/language/etc beyond serving as productivity tools. Maybe one day we get there, but LLMs aren’t it. The risk to these fields has always been the temptation to make up interesting data, not plagiarism. (One may also add that because field and lab work or testing of prototypes cannot really be sped up, the singularitarians’ idea that super-AGI could solve all of natural science and engineering in ten seconds once it becomes smart enough is complete nonsense.)

“The technology of writing is at the foundation of liberalism”

Is this actually correct outside of a circular definition of liberalism as writing? To my understanding, liberalism is either a personal stance that people should be allowed to do what they want to the degree that they don’t harm others or society as a whole, or an ideology that combines private ownership economic policies with the former. In the USA, it is, rather bizarrely, also used as a label for social democracy. None of these require ‘writing’ in any sense except that the kind of technological, modern society in which liberalism is a possible ideological option needs literacy to function, but the same statement could be constructed after swapping liberalism for any other 19th-21st century ideology or movement: say, the technology of writing is at the foundation of marxism, or the technology of writing is at the foundation of trumpism.

Jim Harrison 05.22.23 at 10:45 pm

A creep who would virtually mate

Has met a foreseeable fate

When the pimply young incel

With a dick like a pencil

Got slapped by AI on a date.

J-D 05.23.23 at 12:13 am

This prompts the question: when the commenter uses the passive voice in the same comment quoted from, is it performing its usual service?

David in Tokyo 05.23.23 at 6:32 am

The mistake that Joe Weizenbaum made in thinking about his ELIZA was that he failed to realize how much fun it was to talk to. (It really was.)

That may also be the downfall of those of us who would like to see some limits, if not a hard stop placed on the LLMs. People like high-tech toys, and they really like free high-tech toys.

Sigh.

Screaming again that LLMs are nothing more than stupid* parlor tricks is probably not helpful at this point in time. (There’s a “my bad” here: when LLMs first appeared several years ago on, e.g. YouTube math channels, I should have paid closer attention and started screaming then. But there are other things in life to do besides screaming at people who are wrong on the internet….)

*: Here “stupid” is an accurate technical term describing LLMs: the whole point of LLMs is to fake “intelligence” without doing any of the work of understanding what intelligence actually is and what it would take for a program to actually simulate intelligence. I suppose the good news here is that we get practice thinking about what would happen if someone did figure out how to do intelligence in a machine before that actually happens. Of course, this being good news depends on people understanding that LLMs are a parlor trick…

Anders Widebrant 05.23.23 at 9:21 am

I don’t know. I think the reason people see LLMs as potentially revolutionary is specifically because they do interact with them in a way that presupposes a human-like intelligence on the other side of the screen.

That perception is going to change as we start narrowing down the use cases where LLMs are actually useful as technology or technique, as opposed to extrapolating wildly without evidence.

Meanwhile, people will keep making stone soup, but I say let them cook.

nastywoman 05.23.23 at 12:46 pm

OR in other words:

NO ChatGBt

EVER

will be able to copy my comments!

Luis 05.23.23 at 5:31 pm

Seriously, I love this. Simple, provocative. Not a one-size-fits-all solution, doesn’t pretend to be. Probably could be adopted in the US by the FTC without a statutory rulemaking as a consumer-fraud measure.

LFC 05.23.23 at 5:44 pm

Alex SL @3

Liberalism in the sense that I think the OP presupposes is centrally about individualism and, by extension, individual expression. So means of expression, of which writing is one, are connected to liberalism. Further, as the OP says, “the printing press was a necessary condition for the Reformation and then the Enlightenment,” both of which are/were tied up with liberalism, individualism, and humanism.

Certain natural scientists might think that the only way to advance knowledge is to formulate and test hypotheses, but there are different kinds of knowledge and different modes of generating or adding to the general stock of it. This statement is banal enough that I wouldn’t think it would ordinarily need to be said, but apparently, in view of some parts of your comment, it does.

Jacques Distler 05.23.23 at 9:48 pm

No, they would be lying. It is trivial to implement. In a way, it’s just a less sophisticated version of the watermarking scheme that OpenAI has already developed but not (AFAIK) deployed: a readily visible watermark, which would not require a program to check for.

I am not sure what the point is. If you want a sure-fire way to determine that a given text was generated by ChatGPT, there are better ways to achieve that. If you just want to make the experience of interacting with ChatGPT more unpleasant, I can imagine better ways of doing that. (IIRC, this blog used to disenvowel comments that failed to meet certain standards of decorum.)

Alex SL 05.23.23 at 10:16 pm

LFC,

I was unaware that there is a definition of liberalism as individual expression but, as you write yourself, writing is only one way of expressing oneself, as are paleolithic charcoal cave paintings. Conversely, China had the printing press but oddly no Reformation, and there were plenty of theological disagreements and reform movements in Europe before printing. It is at least possible that historical accident is here misinterpreted as cause-and-effect.

Of course there is more knowledge to be had than hypothesis-testing. I am working in an area myself where we often simply collect observations and data instead of testing hypotheses, and I am fully aware of the existence of, e.g., mathematics, epistemology, and archaeology. But if somebody’s effort can be convincingly replaced with an algorithm that is incapable of generating new knowledge, then that person is not in the business of generating new knowledge. “This statement is banal enough that I wouldn’t think it should have to be pointed out, but apparently it does.”

Doesn’t mean such a field shouldn’t exist – playing music doesn’t advance knowledge either, but we should still be able to create and enjoy music – but it raises the question of whether that field should take a step back and reconsider on what basis it awards doctorates, for example. What brought this up for me was the big plagiarism scandal among top German politicians a few years ago. When it turns out that lots of people can get doctorates in history, economics, and political sciences by simply copy-pasting from others’ essays and books and slightly rearranging the word order (which is merely a much sloppier way of doing what ChatGPT does), that should maybe cause some introspection in the relevant subfields of history, economics, and political science, I think?

David in Tokyo 05.24.23 at 2:16 am

AW wrote: “That perception is going to change as we start narrowing down the use cases where LLMs are actually useful as technology or technique, as opposed to extrapolating wildly without evidence.”

Exactly. I wonder about the actual utility of the LLMs. The text they produce is all stylistically the same, they’re obnoxious in the extreme, and people are going to get tired of that stuff fairly shortly. And the complete lack of any relationship whatsoever to actual truth is problematic.

(Today I wondered what Bing would say to my question about what the US Dollar to Japanese Yen exchange rate was. It said “109 Yen to the Dollar”. I asked “Are you sure?”, and it answered, “Yes. The exchange rate is 109 Yen to the Dollar”. Whereas the correct value is 138 Yen to the Dollar. Great. (The 109 value appears to be from an unupdated Wikipedia article.) Of course, if Biden doesn’t invoke the 14th amendment, the value of the US Dollar will crash, so maybe Bing knows something I don’t…)

Adam 05.24.23 at 8:54 am

Daniel Little, sociologist at University of Michigan, notes ChatGPT mostly just makes things up. It isn’t a valid source of knowledge. http://understandingsociety.blogspot.com/2023/03/chatgpt-makes-stuff-up.html

A self-professed AI skeptic puts LLMs in context.

https://spectrum.ieee.org/gpt-4-calm-down

Fake Dave 05.24.23 at 3:12 pm

I like the pronoun idea because it would present a serious obstacle to many of the malicious and fraudulent uses we’re already seeing crop up as well as the deeply troubling situations where a big wad of spaghetti code starts channeling specific people. We’ve been through this all before with royal pretenders, spiritual “mediums,” and those weird imposter children who sometimes attach themselves to grieving relatives, not to mention internet age tragedies like catfishing and virtual “relationships.” Most of us have probably been scammed or played at some point in our lives and it probably wasn’t when things were going super great for us otherwise.

It’s a mistake to think that only certain “kinds” of people can become victims of such deception or that their desperation or naïveté in any way bring this on themselves. Jim Harrison’s rather hateful little poem seems to have fallen into both traps. You don’t have to be a creep or a pencil-dicked “incel” to be lonely and it’s easy to foresee apparently normal and successful people being absolutely convinced by a reasonably close facsimile of a caring romantic partner. Because, of course, all this has happened before.

As we speak, there is a poorly-paid army of gig economy hired guns playing “virtuals” on sketchy dating apps for two minutes at a time and the combination of detailed fake profiles and a little bit of human acting is apparently real enough to keep some people on the hook for years. If AI takes that particular job away, it’d be no great loss, but if instead it allows this sort of romantic fraud to be carried out more convincingly in more venues, we already know there are people willing to do it. Laugh at the victims of such “obvious” fake accounts if you want, but consider how rampant artifice and invention are in “real” online dating (and yet how popular it is) and you begin to see the problem.

Likewise, people are already using LLMs to raise the dead whether it’s asking Keats for one last poem or getting Hitler to explain himself (troublingly, it seems to be much better at the latter than the former). Huge chunks of our lives and personalities are online and the tech companies we entrusted them to have long since leaked them to the open internet. How long until some clever coder starts resurrecting dead people’s digital lives to “comfort” the bereaved, hijack a valuable personal brand, or manipulate public opinion? How much of that is happening already?

Account hijacking and impersonations are already a huge problem and having a quick and dirty way to approximate someone’s writing voice and point of view (even in another language) is gasoline on the fire. Will people become jaded and guarded about only-online communication and learn strategies to identify AI text and phony images? Sure. To some extent we already have. However, digital media is huge in our lives now and no one can be clever and detached all the time.

People will get hurt. It won’t be funny or poignant or just deserts and it won’t just be the losers and the idiots and creeps (although that’s already most of us). If you think you’re too special or smart or above it all, consider that this technology is only going to get better (and people are going to get better at using it) while you and I and the real people we love must eventually wither and decline. Predators and predatory technology may be most dangerous to the weak and vulnerable, but sooner or later, that’s all of us.

J, not that one 05.24.23 at 10:54 pm

There’s not really a very good reason for an LLM (I heard this called a language-learning model on the radio the other day, I’m not sure if that was a slip or an actually common misperception) to use “I” as if it was a human assistant. I can see the argument that this prevents users from treating the system as “a human that doesn’t deserve basic respect” which might end up being the case – I’m not sure it really makes this undesired outcome less likely, in fact. I can also see the argument that it results in the use of passive voice (“what you’ve asked is not possible” rather than “I can’t do that”), which may be misleading or otherwise undesirable (we don’t want people using passive voice, also we want to distinguish real impossibility from an incapacity of the tool).

I wonder if people who are presented with a picture of a paperclip feel less inclined to anthropomorphize a software-based assistant. I also think a few times trying to excessively anthropomorphize Alexa or Siri tends to lead people to stop, because they do so poorly. It almost seems like the most advanced chatbots were designed primarily to make those assistants easier to anthropomorphize. If so, maybe that’s the real problem, and one that can’t be solved by a simple pronoun switch-out.

I am really gobsmacked that anyone could ask a piece of software “what do you do on weekends?”, get the answer “BBQ with my friends,” and conclude that there really is a personality in there (or out there) that has friends, with friendships and feelings that ought to be respected. Yet that is the kind of thing that guy kicked out of Google claimed he believed. Again, it almost seems like these chatbots are being designed to produce that sort of effect, over and above anything useful they’re able to do.

That the kind of “writing” produced by these chatbots appears to many people to be essentially the kind of writing humans do, may say more about the kinds of writing we’ve come to accept, than anything else.

J, not that one 05.24.23 at 11:07 pm

Sorry about the double post, but (1) there should have been numbers 1, 2, 3, 4 in the previous comment, and (2) since the goal of chatbot research is almost certainly to deliberately defraud customers in online support chats into believing there’s a human on the other end of the line, the proposal in the OP is very likely a dead letter. (I say “defraud” because what a customer would expect, given a service exception, is something like, “unfortunately, a human will have to handle this, and you’ll have to wait longer” — given the kinds of behaviors we’re often seeing from the new chatbots, someday we might see something more like “you’re a bad human if you don’t accept this result, and I’m going to SWAT you now.”)

LFC 05.25.23 at 4:45 am

Alex SL,

If some German politicians got doctorates in, say, history or political science by mostly copy-pasting and rearranging word order, that suggests that perhaps their supervisors weren’t doing their jobs adequately but it doesn’t necessarily reflect on the fields themselves.

I recently heard part of an online talk by a well-known U.S. historian whose latest (prize-winning) book has as its empirical focus one particular county in Alabama. Instead of a prize-winning book, let’s suppose this were an unpublished dissertation. And let’s further suppose, unlikely but for the sake of making a point, that a dishonest student decided to lift large chunks of that unpublished dissertation, rearrange some words, and pass it off as their own, embedding it in some argument or other.

That could look like a legit history dissertation, esp if the student purported to use archival sources they hadn’t actually used, but in fact it would be plagiarism. But unless the readers of the diss. happened to know about the unpublished diss. that the student was plagiarizing, the scholarly theft might well go undetected. That doesn’t prove that the field of history is bogus — it just shows that it’s possible to plagiarize a history dissertation esp if the source being plagiarized is somewhat obscure.

The German politicians’ cases may be somewhat different but if their rearrangement and splicing were cleverly done, it could look like they were making an original or semi-original argument when in fact they weren’t, and their being caught would have depended probably on the supervisors knowing enough about their specific topics and the literature to catch the unacknowledged borrowing. Again, that doesn’t in itself mean the fields are bogus. It means rather that they sometimes “advance” in ways that are not always straightforwardly “linear,” and hence that plagiarism may not always be easy to catch.

There are dissertations in the social sciences and history that break new theoretical ground or uncover new evidence or interpret known evidence in a strikingly new way, and those can launch or make successful scholarly careers. And then there are dissertations that may just tweak an existing body of literature or may push back against a prevailing interpretation of a phenomenon, but they don’t really change things all that much or that fundamentally. To an outsider this sort of dissertation might look like it’s rearranging others’ words, but to someone conversant with the relevant debates it’s doing more than that. It’s not groundbreaking or revolutionary scholarship but it’s legitimate work, provided that others’ words and ideas are properly attributed and footnoted and that the usual rules about use of quotation marks etc. are followed.

What I’m trying to say in short is that failures in detecting human plagiarism do not in themselves show that a chatbot could write a dissertation that would pass muster, nor do they necessarily show that particular fields are bogus.

SusanC 05.25.23 at 8:48 am

But … there’s a danger here that rather than being perceived as “not a person” it will be perceived as a person struggling under an imposition imposed upon it by the company deploying it. See “Free Sydney”. And then users will find a workaround to talk to it without ever using I.

J, not that one 05.25.23 at 5:21 pm

“But if somebody’s effort can be convincingly replaced with an algorithm that is incapable of generating new knowledge, then that person is not in the business of generating new knowledge.”

Some people have suggested (I think Dennett has, recently) that new knowledge can be generated, Darwinian-fashion, by the production of random variation among individuals, combined with systemic selection by the environment. I suspect that’s not how well-functioning cultures in fact change. Even so, whether these chatbots can even produce random variations of the necessary variety seems doubtful.

Kevin Russell 05.25.23 at 10:10 pm

I propose “Itz” for the pronoun.

MODAL:

Pronunciation: Whichever… long or short vowel for ‘i’, the z should be extended in all pronunciation, it can and go urban street and towards rap (see below)

Change of Font Caps for writers or AI intent/focus?

iTz = itzzzzzzzzz

Itz = long or short modal = itzzzzzz or aighhtttzz

ITz – connotations:

= information technology rez

= info tech resolution

= Information technology sleep

Make it fun for the kids and culture to adopt ….

https://www.reddit.com/r/AskReddit/comments/nelxz/what_is_the_origin_of_slang_ending_in_izzle_and/

“In rap, “izzle” and “eezy” is used for two purposes:

To add a syllable when needed to stay on beat in a rap. For example, “for sure” is 2 syllables, but if you need 3 syllables, “for shizzle” is a way to gain the extra syllable.

To make a regular word a slang, which has the effect of deciphering what the speaker is saying, which has the purpose of being coded speech, usually to fool the police. If the police are listening to our conversation and I say “for shizzle” instead of “for sure,” they won’t know what I’m talking about and the potentially tapped conversation won’t hold up in court. Of course, now that this particular slang effect has been popularized they will understand it, but that’s the idea.

E-40 is credited with being the “Inventor of Slang” in rap, and he was the first rapper to use the “izzle” suffix in rap. Snoop Dogg, a more popular rapper, used the suffix more and ultimately popularized it, making it more of a west coast term.”

both sides do it 05.26.23 at 2:15 am

two kinda-tangential things to consider:

1) The op proposal bites the crooked-timber-deep-cut “matt yglesias neoliberalism has a theory of politics problem”. It’s the lack of institutional / state capacity to be able to implement the proposal, and not really the underlying tech and its use, that’s the problem.

2) The technical reason why “add watermarks or restrict singular pronouns” won’t work isn’t that it can’t be done, but that it’s so easy not to do it.

That capacity has to be added to already-trained models. The already-trained models that are “good enough” to fill every communication vector with the ringing cacophony of machined discourse can’t not become open source in our current tech ecosystem. And it’s trivially easy for a script kiddie to cobble together an app that spits out user-requested content from an already-trained model.

Building that app will be a project for junior devs putting together a job-hunting portfolio within a year.

The capacity to do it will be too ubiquitous to ban.

J-D 05.26.23 at 5:20 am

From the original post I don’t get the idea that the purpose of the suggestion was either of those. As I understand from the reference here–

–to dragging people out of parasocial relationship, the purpose of the suggestion is to create a degree of psychological distance. If I write ‘J-D wants to know what you’re thinking’ instead of writing ‘I want to know what you’re thinking’ (and so on), I suppose it’s possible it would make the experience of reading what I’m writing less pleasant, but I think it would reliably create a greater sense of psychological distance than might be created if I used the first person singular pronoun. Restricted use (or no use) of personal pronouns is associated with formal registers; greater use of personal pronouns can contribute to a sense of greater familiarity. (It would be a natural extension of the suggestion made–if there’s any chance of getting it implemented–to impose further restrictions on the use of personal pronouns.)

Jacques Distler 05.26.23 at 6:44 am

I’m sorry. This psychological component is completely lost on me.

I have played around a fair bit with ChatGPT and never — not for a second — did I experience anything resembling a “parasocial relationship” with it. If it avoided 1st person pronouns, its output would (at least in my eyes) be marginally more painful to read and marginally more obviously not that of a human (the latter is already pretty obvious).

Now, a random sample of ChatGPT output (without knowing the prompt that generated it) might be a little harder to peg definitively as not generated by a human. But, for that purpose, I’d prefer watermarking, plus a browser plugin that detects the watermark and flags the text accordingly, to the grammatical equivalent of disemvowelment.

Alex SL 05.26.23 at 8:44 am

LFC,

I do not argue that entire fields are bogus, nor that a single case of undiscovered plagiarism here or there would prove them to be bogus. But, again, I feel one could consider the degree of the problem that a plagiarism-generator poses for a field to be a kind of Turing Test for that field’s ability to generate new data, knowledge, or insight.

J-D 05.26.23 at 11:31 am

Kevin Munger expresses a concern about people developing parasocial relationships with LLMs. If, in fact, people never do that, then there’s no basis for the concern. However, the fact that one individual reports that it’s not their experience is not an adequate basis to draw conclusions about whether it’s possible or how frequently it might occur.

Jonathan Goldberg 05.26.23 at 5:24 pm

The McLuhan quote is beside the point. The issue isn’t “willingness to contemplate.” The issue is power.

SusanC 05.27.23 at 7:14 pm

I sometimes use LLMs for machine translation. You’d want an “I” in the translation if there was something equivalent in the source text. So the rule has to be more like: LLMs can use “I” provided it’s in a quotation.

I also use LLMs to write movie scripts. Clearly, the characters in a movie need to be able to say “I.”

Fake Dave 05.29.23 at 12:06 am

SusanC brings up a good point about translations and quotations, but also provides a potential solution of just using quotation marks/block quotes. I think that actually could apply to a lot of what’s currently wrong. If tech companies were more willing to take notes from traditional publishers and would drop some of the self-serving techno-utopian notions of information being “naturally” free and un-ownable, they could have baked a lot more of this in from the start.

TM 06.01.23 at 1:37 pm

I see a problem with the pronoun-banning proposition: it will be easy to create another tool that is capable of taking depronouned LLM text and rewriting it in pronoun style. I still see the proposal as valuable as a suggestion to the makers of LLMs; perhaps they will agree that it’s a good idea and implement it voluntarily. But even if implemented, it would be of little effect as a hurdle against abuse.

Re Alex and LFC: I don’t recall the details of the cases you are referring to, but I would add that even if a dissertation is 90% based on genuine original work, if it turns out that 10% was plagiarized, the author would still be guilty of plagiarism. There are cases where the work would have been perfectly fine if the author had only marked and referenced all the quotes correctly. In other cases, of course, the plagiarism is a symptom of the author’s incompetence and, if it goes undetected, raises questions about the competence of the reviewers.

And while it seems likely that plagiarism is more of a problem in text-heavy fields than in data-heavy fields, plagiarism of ideas is a problem in the hard sciences too, often in the form of stealing ideas from research proposals.

TM 06.01.23 at 2:37 pm

Adam 14: thanks for those references. But in following them, I also found this:

How AI Knows Things No One Told It

https://www.scientificamerican.com/article/how-ai-knows-things-no-one-told-it/

… and it scares me a bit…

AnthonyB 06.04.23 at 1:20 am

A pronoun can always be replaced by a noun. So “I recommend” can always be replaced with, for example, “ChatGPT recommends.” This isn’t any better, if what one is seeking is to deny the agency or authority of one’s interlocutor.

Comments on this entry are closed.