There are many processes now subsumed under the term “Artificial Intelligence.” The reason we’re talking about it now, though, is that the websites are doing things we never thought websites could do. The pixels of our devices light up like never before. Techno-optimists believe that we’re nowhere close to the limit, that websites will continue to dazzle us — and I hope that this reframing helps put AI in perspective.

Because the first step in the “Artificial Intelligence” process is most important: the creation of an artificial world in which this non-human intelligence can operate.

Artificial Intelligence is intelligence within an artificial space. When humans act within an artificial space, their intelligence is artificial—their operations are indistinguishable from the actions of other actors within the artificial space.

Note that these aren’t arguments; they’re definitions. This territory is changing so rapidly under our feet that we need to be constantly updating our maps. Most conversations about AI are simply confused; my aim in this essay is to propose a new formulation which affords some clarity.

One more definition: Cybernetics is the study of the interface between the human and the artificial—how we can interface with the artificial in order to harness it while retaining our agency. I’ve written before that cybernetics provides key insights from the first integration of computers into both social science and society, but reading these old texts demonstrates how rapidly the artificial has grown from the 1950s/60s to today. The earliest cybernetics centered human biology, our muscles and sensory apparatus, drawing from phenomenology; today, the computer/smartphone/digital interface is the focal point, our gateway to the artificial. Humans and the non-human world revolve around the screen; today’s cybernetics requires a machine phenomenology.

Concerns about “alignment,” the mismatch between human values and their instantiation in the machine, are often addressed by trying to make the artificial world match the human world (real or ideal). I argue that this approach cannot succeed, due to human constraints in speed and information density. We simply cannot verify that these sprawling artificial spaces are aligned with our values, either inductively or deductively, for reasons related to the philosophy of science topics I usually blog about.

The most important first step towards a society with a healthy relationship to AI is to slow and ultimately limit the growth of the artificial. This will take active effort; the business model of “tech companies” involves enticing us to throw everything we can into their artificial little worlds. The entirety of the existing social world we’ve inherited and have been maintaining or expanding is like a healthy, vibrant ecosystem — and tech wants to clearcut it. Individual people can get ahead by accepting the bargain, making this a tragedy of the commons.

Metaphorical work is an essential if understudied methodological input to the process of social science. This is my primary intervention: AI is a whirlpool sucking the human lifeworld into the artificial. Social media is the most complete example: a low-dimensional artificial replacement for human sociality and communication, with built-in feedback both public (likes) and private (clicks). The objective function for which recommendation algorithms optimize is time on the platform, as Nick Seaver documents. The explicit goal of these platforms is literally to capture us.

The more we are drawn into these artificial worlds, the less capable we are as humans. All of our existing liberal/modern institutions are premised on other lower-level social/biological processes, but especially the individual as a coherent, continuous, contiguous self. Artificial whirlpools capture some aspect of previously indivisible individuals and pull us apart — sapping energy from generative, creative, and regulative processes like social reproduction and democracy.

The more we use our intelligence to navigate artificial spaces which record our actions, the more training data we provide to “artificial intelligence” which can then act at superhuman speed and scale in these spaces. The “artificial” is a replacement of the real world with an ontologically simple low-dimensional model, as formulated by Herbert Simon, James C. Scott, and other thinkers influenced by cybernetics.

Humans have always created artificial worlds, from cave paintings to mathematical systems, within which to perform symbolic operations and produce novel insights. The effective application of this process has granted humans mastery over the natural world and, often, over other humans. Our artificial representations of molecules, proteins, bacteria, and stars: these are domains where AI can and should be applied, where it will help us more efficiently exploit the spaces we have created.


But AI is being used to exploit artificial spaces which we have created to stand in for and manage humans. Every new result proclaiming how AI bests humans at some task is a confession that humans have already been reduced to some artificial aspect of ourselves.

Historically, the temporality of this process had been limited to that of the humans creating, manipulating, and translating these artificial worlds. The modern bureaucratic state constructs artificial representations of its citizens in order to govern them. This is technocratic; democratic oversight is supposed to skip straight to the top. There are human intelligences coming up with the categories and deciding how to interact with them. Democratic politics is the means by which we change these categories and relations.

As more inputs to the artificial worlds became machine-readable, the expansion of the AI process began to transcend human temporalities, constrained as they are by biological realities of the life cycle. Postmodern bureaucracy does not rely on human intelligence to define the ontology of the artificial space; machines infer the relevant categories inductively from ever-growing streams of data. Electoral democracy is thus unable to serve its regulatory function – postmodern, inductive bureaucracy is an Unaccountability Machine.

The most recent jump, the impetus for widespread discussion of AI, came not from LLMs themselves but from the introduction of the chat interface. LLMs were made possible by huge data inputs in the artificial world of text, and by advances in the statistical technology of machine learning. But it was the human-world hookup that proved key for widespread adoption – and thus a slope change in the rate of the expansion of the artificial.

For the domains of human life which have already been made artificial, there is little hope of fundamentally stopping AI. There is no reason to expect humans to best machines at artificial benchmarks. Any quantified task is already artificial; the more humans perform the task (and, crucially, are measured and evaluated in performing the task), the better machines can optimize for it. The process is brutally fast and efficient, a simple question of data flows, the cost of compute and perhaps (under)paying for some human tutors for RLHF.

Empirical and technical approaches to AI are fighting on an important but ultimately rearguard front: within the artificial domains which we have already constructed and linked back into society, the goal is to ensure that the machine’s objective function takes into account whatever democratic/human values we want to prioritize. Perfect alignment is impossible, but with blunt instructions we can insist on alignment on the handful of dimensions we think are most important.

The collision of 250-year-old electoral institutions with contemporary technology and culture has rendered democracy itself artificial. Horse-race media coverage, scientific polling, statistical prediction, deregulated campaign finance and A/B tested campaigning – these technologies of the artificial have come to stand in for democracy. But democracy is a process that requires humans in the loop—for it to function well, it requires that humans be the loop—which has been rendered ineffective because of the explosion of the artificial.

Democratic freedom must be rebuilt, starting from the small and expanding outward. John Dewey’s criticism is more relevant than ever: “we acted as if our democracy… perpetuated itself automatically; as if our ancestors had succeeded in setting up a machine that solved the problem of perpetual motion in politics… Democracy is…faith in the capacity of human beings for intelligent judgment and action if proper conditions are furnished.”

Concerns over citizens’ intelligent judgment have accelerated a technocratic/populist divide in our political culture. The technocratic solution is to constrain citizens’ choices, lest they make the wrong one. The populist solution is to deny and demonize the very concept of intelligent judgment.

But the fundamental problem is that our increasingly artificial world does not furnish human beings with the proper conditions. Democratic freedom is a creative freedom, the freedom of integral, embodied human beings interacting within communities, rather than “dividuals” fragmenting themselves into artificial environments.

We must reject the narrative that people or societies must feed ever-more of themselves into the whirlpool of the artificial to remain “competitive.” The more we optimize for metrics in artificial realms, the farther these realms drift from human reality. Populist politics, climate change and falling birthrates demonstrate the fragility of societies that have become too narrowly optimized.

We must instead recommit ourselves to each other – to reaffirm our faith in democracy as a way of collective problem-solving. Each of us has to work towards being worthy of that faith, and to construct small pockets of democratic human activity to expand outwards, rather than letting ourselves be dragged apart into the various artificial whirlpools which have adopted the mantle of progress.

(This is the first in a series of posts that aim at redescribing the present. Next week I will discuss the inversion of the business cliche that you should aim to sell shovels during a gold rush.)

{ 23 comments }

MisterMr 10.21.24 at 2:51 pm

You compare “human reality” to “artificial reality”, but then all cultural and legal realities are artificial (as you already imply when you speak of bureaucracy) so what is “human reality” really?

Only the physical part of reality that we can directly perceive with our senses?

But if we lived like this we would live in a really limited world, like a cat.

Our “humanity” really consists in the ability to live in this kind of “artificial worlds”.

“a human is an animal who is featherless, biped, and lives in an artificial cultural world”: AI-ristoteles

William S. Berry 10.22.24 at 2:51 am

Based on a quick first read, I think I agree with the OP.

@MisterMr:

Nah.

I think that Kevin is talking about something much more fundamental than what you are seeing from the POV of the typical intellectually “etherealized” intelligence (same as all of us if we don’t keep our feet on the ground — or, at least, our heads above water).

It’s called “alienation”, and it’s an old-fashioned idea that’s been around for a couple of centuries. No progress in its amelioration has been made that we can discern. We mostly just don’t talk about it anymore (at least explicitly).*

Surrender to the artificial idealities (as against any kind of, you know, reality) of AI might not be the end of humanity. But it will be a radical acceleration of human alienation from the world we live in, so yes, finally, a possible end to one kind of humanity.

Anyway, whatever. I think I’m going to drink a couple glasses of a good Tempranillo I opened an hour ago.

And thnx to KM for this piece.

*I take alienation to be the separation of the human being from their world due to the imposition of artificial contrivance suitable for the purpose. In the case of the laborer, there is no choice but to accede to the requirements of Capital. The Christian (or other believer, assuming sincerity) creates their own alienated identity: A false hope and a handful of vaguely ascetic values define the “calumniators of the world” (as Nietzsche called them). Either way, it’s Death against Life, which ain’t no good thing, which is what finally matters when you get down tuit.

William S. Berry 10.22.24 at 8:06 am

I should have compounded my last two words as “downtuit”* (a coinage perhaps, but, alas, not likely an original one).

*Sort of by analogy to “aroundtuit”, “uptuit”, etc., or maybe even “intuit”.

Or something.

qwerty 10.22.24 at 4:18 pm

But I always thought that populism (“the populist solution”) is precisely a pro-democratic/anti-technocratic revolt of common sense of the common man. The concept of judgment that it denies and demonizes is precisely the technocratic concept of judgment.

Sashas 10.22.24 at 7:36 pm

I feel like a crazy person. Premises are important, and it feels like for a lot of what I’m reading lately I don’t even understand where their premises are coming from.

“websites are doing things we never thought websites could do” …like what? Genuinely, I am a computer science professor and I have no idea what you’re talking about. I’m not even saying you’re wrong necessarily, but can we get some examples?

“The pixels of our devices light up like never before.” Huh? I remember the Geocities era with its flashy backgrounds and vibrating banner ads, not to mention the auto-playing snippets of music.

“the first step in the “Artificial Intelligence” process is most important: the creation of an artificial world in which this non-human intelligence can operate.” I have three issues with this.

Your first claim is that the creation of this “artificial world” is part of the “Artificial Intelligence” process. I would like some sort of evidence to justify this claim. Counterpoint: Building something “the world is not ready for” is one of the top stereotypes of tech innovators. Getting the world ready for AI sounds like a fine idea, but I think the onus is on you to offer some evidence that it is part of the process.

Your second claim is that the artificial world from claim 1 is the first step in the process. For the same reasons, this seems like even more of a stretch. Moreover if it’s the first step, shouldn’t we be seeing lots of evidence of it already? Can you point me to that evidence if so?

Your third claim is that this step is the most important. Leaving aside my belief that it isn’t happening and isn’t part of the process anyway, why is it important let alone the most important?

“Artificial Intelligence is intelligence within an artificial space.” Where does this definition come from? I’m a CS professor, and if you asked me to define AI I would first point out that AI is a broad blanket term that is used (and abused) to cover a wide variety of things, but that doesn’t mean I am cool with literally any definition. I can’t see myself accepting this definition from a student. You aren’t one of my students and I’m open to reframing terms in a philosophical setting like ours, but even adding in the rest of the paragraph I don’t feel like I really know what you even mean, and to the extent that I think I am able to guess I don’t see it as within acceptable bounds of what… words… mean…

I’m going to stop there for now, other than to say that this (“my aim in this essay is to propose a new formulation which affords some clarity.”) is a worthy goal and I hope you do more.

Charlie W 10.22.24 at 9:41 pm

“All of our existing liberal/modern institutions are premised on other lower-level social/biological processes, but especially the individual as a coherent, continuous, contiguous self. Artificial whirlpools capture some aspect of previously indivisible individuals and pulls us apart — sapping energy from generative, creative, and regulative processes like social reproduction and democracy.”

I like the piece and want to agree with it, but do wonder if this passage (along with other parts) imagines the historical individual as better than they were.

Kenny Easwaran 10.23.24 at 12:47 am

What does it mean to say “Artificial Intelligence is intelligence within an artificial space”?

I get it when I’m thinking about social media algorithms and search algorithms and LLMs and image generators and so on. But what is going on with self-driving cars, or robot dogs? In what sense are those “intelligence within an artificial space”? You can say that the self-driving car is in an “artificial space” because the roadways are an inherently artificial space, with all their traffic rules and stripes and reflectors and signs and so on – but humans have been intelligences operating in that space for at least 8 or so decades. And a Boston Dynamics robot dog doing a search and rescue in a forest fire doesn’t seem to be operating in an artificial space.

MisterMr 10.23.24 at 2:19 pm

So if I think of what could go wrong with AI, the first image that comes to my mind is that of some emotionally unbalanced single person (most likely male) who falls in love with a virtual character who appears realistic because it is “powered” by a ChatGPT-like bot.

Perhaps this is what the OP means by “artificial world”, I’m not sure.

The “wrongness” of my example comes from the fact that we (humans) have a part of the brain that works emotionally, those emotions have a biological base (for example “love” for a partner is intermingled with sexuality), and since AIs can pretend to be humans, they can make these emotions “misfire” relative to what can be assumed to be a healthy, normal behaviour.

But apart from the difficulty of defining what is a “healthy” behaviour, this kind of problem only works because the AI can offer a very realistic “illusion”, not because it is actually an artificial intelligence (which arguably it isn’t).

The same problem would happen if during an election campaign someone paid to have a lot of very active AI-powered chatbots that inundate social media with political memes: this would be a problem (perhaps will be a problem), but it is on par with deepfakes, or with a few people owning most mass media.

David in Tokyo 10.23.24 at 3:56 pm

I kinda like the ““Artificial Intelligence is intelligence within an artificial space” bit.

It points out that LLMs don’t do reality, don’t do logic, don’t do reasoning. (Really, they don’t. They are statistical next word guessing programs (on steroids, admittedly) that operate on undefined tokens.) So it’s a completely bogus space that they operate in.

But that’s not what you meant.

As someone who spent quite a bit of time in the field, albeit long ago, I’m not amused, impressed, or even interested in the current round. It’s complete BS.

IMHO, taking the current round of AI seriously (i.e. philosophically) is a fool’s game. A less foolish thing would be assuming that someone had done AI “right”, and that it actually honestly did the work of reasoning about the world and building internal models of the world and busting its butt to keep that internal model consistent and correct. And then ask what properties that system must have. That might be of intellectual interest. But it would require a lot of work. A really really really lot of work to figure out how people do that. We are nowhere near understanding how people build and use their internal models of the world.

But today’s AI Bros don’t like doing work. Their religion is that doing an insanely large number of stupid computations might possibly result in “intelligence” appearing as an emergent phenomenon, that’s their holy grail. This is, of course, naive and silly.

Ghostshifter Runningbird 10.24.24 at 10:19 pm

Something lots of websites do is render nicely on tablets, desktops, and phones. It would be nice if this scaled automatically on my phone, at least always asking if I want to view the site in simplified mode would be great. I never thought it was impossible for Crooked Timber to be difficult to read on a phone.

dk 10.25.24 at 6:53 am

As someone who’s built quite a few “AI” systems, I can say that this definition was where I stopped reading. Reminds me of Feynman’s famous remark, “Philosophy of science is as useful to scientists as ornithology is to birds.”

Bob 10.26.24 at 2:34 am

What dk said at @11.

Ikonoclast 10.26.24 at 6:19 am

Einstein, it seems, had a different view to dk.

“I fully agree… about the significance and educational value of methodology as well as history and philosophy of science. So many people today—and even professional scientists—seem to me like somebody who has seen thousands of trees but has never seen a forest. A knowledge of the historic and philosophical background gives that kind of independence from prejudices of his generation from which most scientists are suffering. This independence created by philosophical insight is—in my opinion—the mark of distinction between a mere artisan or specialist and a real seeker after truth.” (Einstein to Thornton, 7 December 1944, EA 61–574).

Footnote: I don’t think Feynman meant his quip to be taken too seriously. It is easy to see it is based on a category mistake which segues into an incongruity, a false equivalence. That is what creates the humour. It is a nice wisecrack but it is not a logical argument.

Ikonoclast 10.26.24 at 11:08 am

Several commenters above had trouble understanding or accepting these “definitions” by Kevin Munger. It is an arresting formulation but on reflection it seems clear.

“Artificial Intelligence is intelligence within an artificial space. When humans act within an artificial space, their intelligence is artificial—their operations are indistinguishable from the actions of other actors within the artificial space.”

Munger’s claim that these are “definitions” is too modest in my opinion. I think they are more than definitions. I think they are empirically based category descriptions. Munger might disagree with me on this of course. My contention hinges on the given idea of “artificial space”: a purely formal computational, computed and virtual space made by the system (of hardware, firmware and software) made by humans for modelling and control purposes. This modelled place is really nothing like any real space outside of its very limited homologous modelling of real dimensions, real objects and real actors.

As well as modelling a sad fraction of “is”, the artificial virtual space incorporates a whole lot of “ought”. The human user is the object of much rapidly computed manipulation in the virtual space. You ought do this. You ought think that. You ought want everything. You ought fear the emanations of the virtual verities. Virtual verities can be no verities at all, of course. In that zone, nothing is “but thinking makes it so”.

crown vic virus 10.26.24 at 12:05 pm

…but despite this, a few tentative rules might be adduced for the suicidal traveller to the Twisted World:

Remember that all rules may lie, in the Twisted World, including this rule which points out the exception, and including this modifying clause which invalidates the exception … ad infinitum.

But also remember that no rule necessarily lies; that any rule may be true, including this rule and its exceptions.

In the Twisted World, time need not follow your preconceptions. Events may change rapidly (which seems proper), or slowly (which feels better), or not at all (which is hateful).

It is conceivable that nothing whatsoever will happen to you in the Twisted World. It would be unwise to expect this, and equally unwise to be unprepared for it.

Among the kingdoms of probability that the Twisted World sets forth, one must be exactly like our world; and another must be exactly like our world except for one detail; and another exactly like ours except for two details; and so forth. And also – one must be completely unlike our world except for one detail; and so forth.

The problem is always prediction: how to tell what world you are in before the Twisted World reveals it disastrously to you.

In the Twisted World, as in any other, you are apt to discover yourself. But only in the Twisted World is that meeting usually fatal.

Familiarity breeds shock – in the Twisted World.

The Twisted World may conveniently, (but incorrectly) be thought of as a reversed world of Maya, of illusion. You may find that the shapes around you are real, while You, the examining consciousness, are illusion. Such a discovery is enlightening, albeit mortifying.

A wise man once asked, ‘What would happen if I could enter the Twisted World without preconceptions?’ A final answer to his question is impossible; but we would hazard that he would have some preconceptions by the time he came out. Lack of opinion is not armour.

Some men feel that the height of intelligence is the discovery that all things may be reversed, and thereby become their opposites. Many clever games can be played with this proposition, but we do not advocate its use in the Twisted World. There all doctrines are equally arbitrary, including the doctrine of the arbitrariness of doctrines.

Do not expect to outwit the Twisted World. It is bigger, smaller, longer and shorter than you; it does not prove; it is.

Something that is never has to prove anything. All proofs are attempts at becoming. A proof is true only to itself, and it implies nothing except the existence of proofs, which prove nothing.

Anything that is, is improbable, since everything is extraneous, unnecessary, and a threat to the reason.

Three comments concerning the Twisted World may have nothing to do with the Twisted World. The traveller is warned.

FROM The Inexorability of the Specious, BY ZE KRAGGASH; FROM THE MARVIN FLYNN MEMORIAL COLLECTION.

J-D 10.27.24 at 6:56 am

Spike Jonze had this story idea two decades ago and made it into a film (Her) one decade ago.

David in Tokyo 10.27.24 at 6:58 am

“intelligence within an artificial space.”

The problem with this definition is that if you look at the current round of AI, there’s no “I” there. LLMs operate on sequences of undefined tokens (and are (hyper-glorified) statistical pattern matchers whose outputs have no relationship to the real world) and “neural nets” are a class of SIMD computation that’s useful for doing gradient descent in multidimensional spaces, some pattern recognition sorts of things, and maybe some other stuff, but other than being a computational model*, it’s completely unrelated to (i.e. has absolutely no similarities whatsoever to) any neural system in any known animal. To quote Jerry Fodor, “The Mind Doesn’t Work that Way”. He was wrong then, but he’s right this time. (Fodor argued that the mind does things that look like symbolic computations but are way way better. We humans do reason about things and concepts, and that reasoning sure looks like symbolic computation, so his argument is arguable, but we AI types never figured out how to do symbolic computations anywhere near as well as the mind does. But if you don’t have concepts to reason about (which current AI doesn’t) you can’t get anywhere near having an argument with Fodor.)

Whatever. Like Roger said “There’s no such thing as AI”.

*: The Church-Turing thesis tells us that all computation systems are equivalent; a given function is either computable by every computer, or computable by no computer.

J-D 10.27.24 at 7:02 am

I’m not sure whether ornithology is entirely useless to birds, because they might benefit from the actions of pet owners, veterinarians and conservationists who are informed by ornithology; but even if it were the case that ornithology is entirely useless to birds, it would not follow automatically that ornithology is entirely useless. (I am confident that Richard Feynman understood this and was not in favour of ornithology being abandoned as useless.) Likewise, even if it were the case that philosophy of science is entirely useless to scientists, it would not automatically follow that philosophy of science is entirely useless.

J-D 10.27.24 at 7:08 am

Evidently there’s a good deal of difference between you and me: which doesn’t have to be a bad thing, because it takes all sorts to make a world. When I read things and struggle to understand them, the first hypothesis that occurs to me is not that the balance of my mind is disturbed but rather that the writers have not expressed themselves clearly. I work hard at expressing myself clearly but I know I fall short of 100% success and always will; why shouldn’t it be common for other people, also, to fail to express themselves clearly?

J-D 10.27.24 at 10:56 am

We used to have a commenter here who went by the screen-name Ze Kraggash, after the character from the Robert Sheckley novel Mindswap, which makes it amusing to have a long quote from the novel posted here all this time later.

David in Tokyo 10.27.24 at 3:38 pm

“Likewise, even if it were the case that philosophy of science is entirely useless to scientists,..”

FWIW, I follow the “Not Even Wrong” blog, which argues that the blokes doing “string theory” have completely lost it. (Seems right to me: string theory isn’t a theory, it’s an infinite number of possible theories (but the math’s too hard to actually stipulate any of those theories), and the multiverse idea is seriously ridiculous.) And Michio Kaku wrote a book that’s completely wrong about everything it says.

https://scottaaronson.blog/?p=7321

So it sure looks to me as though the theoretical physicists are desperately in need of some philosophy of science.

Also, there was an article in Science on what we currently think about the evolution of language, but it admitted that every attempt at teaching “language” to other animals has failed to create animals that say anything different from what they were saying before with their non-linguistic sounds. So these guys also need some help from philosophy of science and language, although this collaboration might actually be productive.

alfredlordbleep 10.28.24 at 1:00 am

Ikonoclast 10.26.24 at 6:19 am

Progress in argument from authority: Einstein

Thanks.

Kevin Munger 10.28.24 at 11:44 am

Thanks for all the engagement with this!

Sashas @5 — The first two passages you quote are meant to be an ironic dismissal of LLMs/generative AI: there’s a ton of buzz but really they’re just websites. The main thing that these websites do is show us pixels.

I agree that what I wrote doesn’t make sense from existing premises. That’s why I tried to make it clear that “these aren’t arguments — they’re definitions.” These are my premises. This is an effort at redescription, in Rorty’s framework.

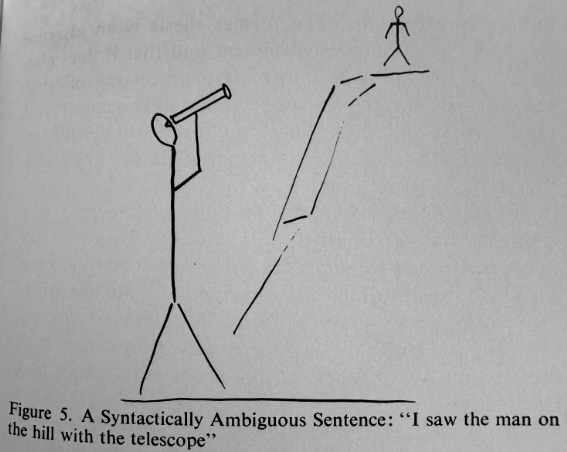

I don’t think I’ve nailed it yet! But I’ll be continuing to work through this framework. Many ppl had questions about what I mean so I probably need to explicate this better. Take the example of “playing chess.” If by “playing chess” we mean sitting in a room with another human and physically moving pieces of wood around, then computers still can’t play chess (and never will, by definition). If by “playing chess” we mean “taking actions within the symbolic space according to rules of the game”, it’s unclear to me how we can differentiate human and non-human intelligence. Our action space is radically reduced (bc this is an artificial space). It’s not clear that anything that can be done with a rook is distinctively human.

Ikonoclast @14 — I appreciate this. Perhaps “definition” is indeed too weak to describe what I’m trying to do here. But following what I wrote above — I don’t think that “artificial” has to be computational. Modernist paper-driven bureaucracy is artificial; so is chess; so are GPAs and SAT scores.

To everyone who thinks philosophy of science is useless — not sure why you’re wasting your time reading my blog ¯\_(ツ)_/¯
