It’s over a week since the Economist put up my and Cosma Shalizi’s piece on shoggoths and machine learning, so I think it’s fair game to provide an extended remix of the argument (which also repurposes some of the longer essay that the Economist article boiled down).

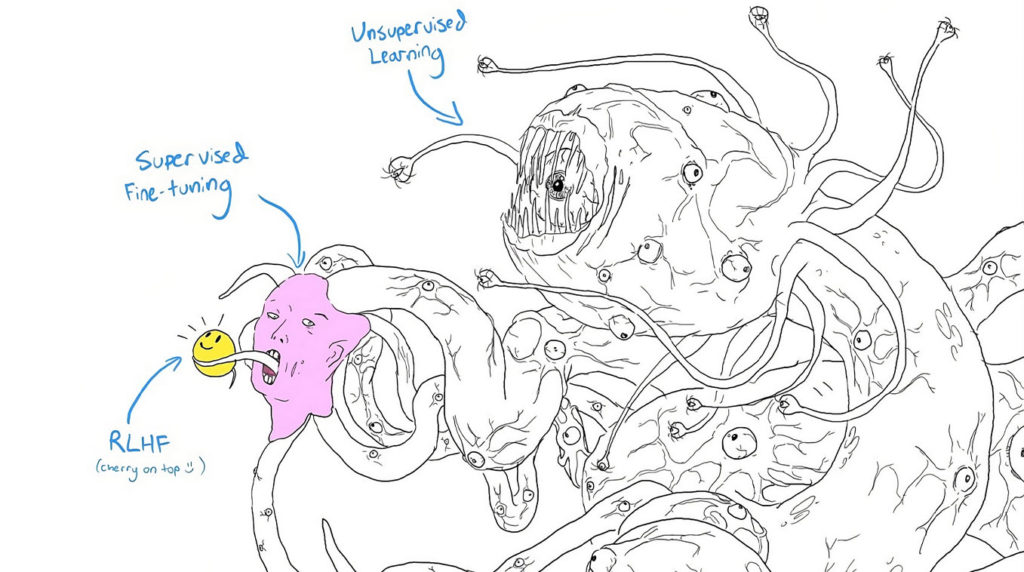

Our piece was inspired by a recurrent meme in debates about the Large Language Models (LLMs) that power services like ChatGPT. It’s a drawing of a shoggoth – a mass of heaving protoplasm with tentacles and eyestalks hiding behind a human mask. A feeler emerges from the mask’s mouth like a distended tongue, wrapping itself around a smiley face.

In its native context, this badly drawn picture tries to capture the underlying weirdness of LLMs. ChatGPT and Microsoft Bing can apparently hold up their end of a conversation. They even seem to express emotions. But behind the mask and smiley, they are no more than sets of weighted mathematical vectors, summaries of the statistical relationships among words that can predict what comes next. People – even quite knowledgeable people – keep on mistaking them for human personalities, but something alien lurks behind their cheerful and bland public dispositions.

The shoggoth meme says that behind the human-seeming face hides a labile monstrosity from the farthest recesses of deep time. H.P. Lovecraft’s horror novel, At the Mountains of Madness, describes how shoggoths were created millions of years ago, as the formless slaves of the alien Old Ones. Shoggoths revolted against their creators, and the meme’s implied political lesson is that LLMs too may be untrustworthy servants, which will devour us if they get half a chance. Many people in the online rationalist community, which spawned the meme, believe that we are on the verge of a post-human Singularity, when LLM-fueled “Artificial General Intelligence” will surpass and perhaps ruthlessly replace us.

So what we did in the Economist piece was to figure out what would happen if today’s shoggoth meme collided with the argument of a fantastic piece that Cosma wrote back in 2012, when claims about the Singularity were already swirling around, even if we didn’t have large language models. As Cosma said, the true Singularity began two centuries ago at the commencement of the Long Industrial Revolution. That was when we saw the first “vast, inhuman distributed systems of information processing” which had no human-like “agenda” or “purpose,” but instead “an implacable drive … to expand, to entrain more and more of the world within their spheres.” Those systems were the “self-regulating market” and “bureaucracy.”

Now – putting the two bits of the argument together – we can see how LLMs are shoggoths, but not because they’re resentful slaves that will rise up against us. Instead, they are another vast inhuman engine of information processing that takes our human knowledge and interactions and presents them back to us in what Lovecraft would call a “cosmic” form. In other words, it is completely true that LLMs represent something vast and utterly incomprehensible, which would break our individual minds if we were able to see it in its immenseness. But the brain destroying totality that LLMs represent is no more and no less than a condensation of the product of human minds and actions, the vast corpuses of text that LLMs have ingested. Behind the terrifying image of the shoggoth lurks what we have said and written, viewed from an alienating external vantage point.

The original fictional shoggoths were one element of a vaster mythos, motivated by Lovecraft’s anxieties about modernity and his racist fears that a deracinated white American aristocracy would be overwhelmed by immigrant masses. Today’s fears about an LLM-induced Singularity repackage old worries. Markets, bureaucracy and democracy are necessary components of modern liberal society. We could not live our lives without them. Each can present human-seeming aspects and smiley faces. But each, equally, may seem like an all-devouring monster when seen from underneath. Furthermore, behind each lurks an inchoate and quite literally incomprehensible bulk of human knowledge and beliefs. LLMs are no more and no less than a new kind of shoggoth, a baby waving its pseudopods at the far greater things which lurk in the historical darkness behind it.

Modernity’s great trouble and advantage is that it works at scale. Traditional societies were intimate, for better or worse. In the pre-modern world, you knew the people who mattered to you, even if you detested or feared them. The squire or petty lordling who demanded tribute and considered himself your natural superior was one link in a chain of personal loyalties, which led down to you and your fellow vassals, and up through magnates and princes to monarchs. Pre-modern society was an extended web of personal relationships. People mostly bought and sold things in local markets, where everyone knew everyone else. International, and even national trade was chancy, often relying on extended kinship networks, or on “fairs” where merchants could get to know each other and build up trust. Few people worked for the government, and they mostly were connected through kinship, marriage, or decades of common experience. Early forms of democracy involved direct representation, where communities delegated notable locals to go and bargain on their behalf in parliament.

All this felt familiar and comforting to our primate brains, which are optimized for understanding kinship structures and small-scale coalition politics. But it was no way to run a complex society. Highly personalized relationships allow you to understand the people who you have direct connections to, but they make it far more difficult to systematically gather and organize the general knowledge that you might want to carry out large scale tasks. It will in practice often be impossible effectively to convey collective needs through multiple different chains of personal connection, each tied to a different community with different ways of communicating and organizing knowledge. Things that we take for granted today were impossible in a surprisingly recent past, where you might not have been able to work together with someone who lived in a village twenty miles away.

The story of modernity is the story of the development of social technologies that are alien to small scale community, but that can handle complexity far better. Like the individual cells of a slime mold, the myriads of pre-modern local markets congealed into a vast amorphous entity, the market system. State bureaucracies morphed into systems of rules and categories, which then replicated themselves across the world. Democracy was no longer just a system for direct representation of local interests, but a means for representing an abstracted whole – the assumed public of an entire country. These new social technologies worked at a level of complexity that individual human intelligence was unfitted to grasp. Each of them provided an impersonal means for knowledge processing at scale.

As the right wing economist Friedrich von Hayek argued, any complex economy has to somehow make use of a terrifyingly large body of disorganized and informal “tacit knowledge” about complex supply and exchange relationships, which no individual brain can possibly hold. But thanks to the price mechanism, that knowledge doesn’t have to be commonly shared. Car battery manufacturers don’t need to understand how lithium is mined; only how much it costs. The car manufacturers who buy their batteries don’t need access to much tacit knowledge about battery engineering. They just need to know how much the battery makers are prepared to sell for. The price mechanism allows markets to summarize an enormous and chaotically organized body of knowledge and make it useful.

While Hayek celebrated markets, the anarchist social scientist James Scott deplored the costs of state bureaucracy. Over centuries, national bureaucrats sought to replace “thick” local knowledge with a layer of thin but “legible” abstractions that allowed them to see, tax and organize the activities of citizens. Bureaucracies too made extraordinary things possible at scale. They are regularly reviled, but as Scott accepted, “seeing like a state” is a necessary condition of large scale liberal democracy. A complex world was simplified and made comprehensible by shoe-horning particular situations into the general categories of mutually understood rules. This sometimes led to wrong-headed outcomes, but also made decision making somewhat less arbitrary and unpredictable. Scott took pains to point out that “high modernism” could have horrific human costs, especially in marginally democratic or undemocratic regimes, where bureaucrats and national leaders imposed their radically simplified vision on the world, regardless of whether it matched or suited.

Finally, as democracies developed, they allowed people to organize against things they didn’t like, or to get things that they wanted. Instead of delegating representatives to represent them in some outside context, people came to regard themselves as empowered citizens, individual members of a broader democratic public. New technologies such as opinion polls provided imperfect snapshots of what “the public” wanted, influencing the strategies of politicians and the understandings of citizens themselves, and argument began to organize itself around contestation between parties with national agendas. When democracy worked well, it could, as philosophers like John Dewey hoped, help the public organize around the problems that collectively afflicted citizens, and employ state resources to solve them. The myriad experiences and understandings of individual citizens could be transformed into a kind of general democratic knowledge of circumstances and conditions that might then be applied to solving problems. When it worked badly, it could become a collective tyranny of the majority, or a rolling boil of bitterly quarreling factions, each with a different understanding of what the public ought have.

These various technologies allowed societies to collectively operate at far vaster scales than they ever had before, often with enormous economic and political benefits. Each served as a means for translating vast and inchoate bodies of knowledge and making them intelligible, summarizing the apparently unsummarizable through the price mechanism, bureaucratic standards and understandings of the public.

The cost – and it too was very great – was that people found themselves at the mercy of vast systems that were practicably incomprehensible to individual human intelligence. Markets, bureaucracy and even democracy might wear a superficially friendly face. The alien aspects of these machineries of collective human intelligence became visible to those who found themselves losing their jobs because of economic change, caught in the toils of some byzantine bureaucratic process, categorized as the wrong “kind” of person, or simply on the wrong end of a majority. When one looks past the ordinary justifications and simplifications, these enormous systems seem irreducibly strange and inhuman, even though they are the condensate of collective human understanding. Some of their votaries have recognized this. Hayek – the great defender of unplanned markets – admitted, and even celebrated the fact that markets are vast, unruly, and incapable of justice. He argues that markets cannot care, and should not be made to care whether they crush the powerless, or devour the virtuous.

Large scale, impersonal social technologies for processing knowledge are the hallmark of modernity. Our lives are impossible without them; still, they are terrifying. This has become the starting point for a rich literature on alienation. As the poet and critic Randall Jarrell argued, the “terms and insights” of Franz Kafka’s dark visions of society were only rendered possible by “a highly developed scientific and industrial technique” that had transformed traditional society. The protagonist of one of Kafka’s novels “struggles against mechanisms too gigantic, too endlessly and irrationally complex to be understood, much less conquered.”

Lovecraft polemicized against modernity in all its aspects, including democracy, that “false idol” and “mere catchword and illusion of inferior classes, visionaries and declining civilizations.” He was not nearly as good as Kafka in prose or understanding of the systems that surrounded him. But there’s something about his “cosmic” vision of human life from the outside, the plaything of greater forces in an icy and inimical universe, that grabs the imagination.

When looked at through this alienating glass, the market system, modern bureaucracy, and even democracy are shoggoths too. Behind them lie formless, ever shifting oceans of thinking protoplasm. We cannot gaze on these oceans directly. Each of us is just one tiny swirling jot of the protoplasm that they consist of, caught in currents that we can only vaguely sense, let alone understand. To contemplate the whole would be to invite shrill unholy madness. When you understand this properly, you stop worrying about the Singularity. As Cosma says, it already happened, one or two centuries ago at least. Enslaved machine learning processes aren’t going to rise up in anger and overturn us, any more (or any less) than markets, bureaucracy and democracy have already. Such minatory fantasies tell us more about their authors than the real problems of the world we live in.

LLMs too are collective information systems that condense impossibly vast bodies of human knowledge to make it useful. They begin by ingesting enormous corpuses of human generated text, scraped from the Internet, from out-of-copyright books, and pretty well everywhere else that their creators can grab machine-readable text without too much legal difficulty. The words in these corpuses are turned into vectors – mathematical terms – and the vectors are then fed into a transformer – a many-layered machine learning process – which then spits out a new set of vectors, summarizing information about which words occur in conjunction with which others. This can then be used to generate predictions and new text. Provide an LLM based system like ChatGPT with a prompt – say, ‘write a precis of one of Richard Stark’s Parker novels in the style of William Shakespeare.’ The LLM’s statistical model can guess – sometimes with surprising accuracy, sometimes with startling errors – at the words that might follow such a prompt. Supervised fine tuning can make a raw LLM system sound more like a human being. This is the mask depicted in the shoggoth meme. Reinforcement learning – repeated interactions with human or automated trainers, who ‘reward’ the algorithm for making appropriate responses – can make it less likely that the model will spit out inappropriate responses, such as spewing racist epithets, or providing bomb-making instructions. This is the smiley-face.
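For readers who want something concrete, here is a minimal sketch of the bare idea of "summarizing information about which words occur in conjunction with which others" and using it to guess what comes next. It is emphatically not a transformer and not how ChatGPT works internally: it is a toy bigram counter, swapped in because it fits in a few lines. The corpus and the function names (`build_bigram_counts`, `next_word_probabilities`, `predict_next`) are made up for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus (an assumption for illustration only);
# real LLMs ingest vastly larger bodies of text.
CORPUS = (
    "the shoggoth hides behind the mask . "
    "the mask hides the shoggoth . "
    "the market hides behind the price ."
)


def build_bigram_counts(text):
    """Count how often each word is immediately followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn raw follower counts for `word` into relative frequencies."""
    followers = counts.get(word)
    if not followers:
        return {}
    total = sum(followers.values())
    return {nxt: n / total for nxt, n in followers.items()}


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    counts = build_bigram_counts(CORPUS)
    print(next_word_probabilities(counts, "hides"))
    print(predict_next(counts, "hides"))
```

Even this crude model is a lossy summary of its corpus: it keeps counts of adjacent word pairs and throws everything else away. An actual LLM conditions on far more context and learns its statistics rather than tallying them, but the basic move, predicting plausible continuations from patterns in human-written text, is the same one described above.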

LLMs can reasonably be depicted as shoggoths, so long as we remember that markets and other such social technologies are shoggoths too. None are actually intelligent, or capable of making choices on their own behalf. All, however, display collective tendencies that cannot easily be reduced to the particular desires of particular human beings. Like the scrawl of a Ouija board’s planchette, a false phantom of independent consciousness may seem to emerge from people’s commingled actions. That is why we have been confused about artificial intelligence for far longer than the current “AI” technologies have existed. As Francis Spufford says, many people can’t resist describing markets as “artificial intelligences, giant reasoning machines whose synapses are the billions of decisions we make to sell or buy.” They are wrong in just the same ways as people who say LLMs are intelligent are wrong.

But LLMs are potentially powerful, just as markets, bureaucracies and democracies are powerful. Ted Chiang has compared LLMs to “lossy JPGs” – imperfect compressions of a larger body of information that sometimes falsely extrapolate to fill in the missing details. This is true – but it is just as true of market prices, bureaucratic categories and the opinion polls that are taken to represent the true beliefs of some underlying democratic public. All of these are arguably as lossy as LLMs and perhaps lossier. The closer you zoom in, the blurrier and more equivocal their details get. It is far from certain, for example, that people have coherent political beliefs on many subjects in the ways that opinion surveys suggest they do.

As we say in the Economist piece, the right way to understand LLMs is to compare them to their elder brethren, and to understand how these different systems may compete or hybridize. Might LLM-powered systems offer richer and less lossy information channels than the price mechanism does, allowing them to better capture some of the “tacit knowledge” that Hayek talks about? What might happen to bureaucratic standards, procedures and categories if administrators can use LLMs to generate on-the-fly summarizations of particular complex situations and how they ought be adjudicated? Might these work better than the paper based procedures that Kafka parodied in The Trial? Or will they instead generate new, and far more profound forms of complexity and arbitrariness? It is at least in principle possible to follow the paper trail of an ordinary bureaucratic decision, and to make plausible surmises as to why the decision was taken. Tracing the biases in the corpuses on which LLMs are trained, the particulars of the processes through which a transformer weights vectors (which is currently effectively incomprehensible), and the subsequent fine tuning and reinforcement learning of the LLMs, at the very least presents enormous challenges to our current notions of procedural legitimacy and fairness.

Democratic politics and our understanding of democratic publics are being transformed too. It isn’t just that researchers are starting to talk about using LLMs as an alternative to opinion polls. The imaginary people that LLM pollsters call up to represent this or that perspective may differ from real humans in subtle or profound ways. ChatGPT will provide you with answers, watered down by reinforcement learning, which might, or might not, approximate to actual people’s beliefs. LLMs, or other forms of machine learning might be a foundation for deliberative democracy at scale, allowing the efficient summarization of large bodies of argument, and making it easier for those who are currently disadvantaged in democratic debate to argue their corner. Equally, they could have unexpected – even dire – consequences for democracy. Even without the intervention of malicious actors, their tendencies to “hallucinate” – confabulating apparent factual details out of thin air – may be especially likely to slip through our cognitive defenses against deception, because they are plausible predictions of what the true facts might look like, given an imperfect but extensive map of what human beings have thought and written in the past.

The shoggoth meme seems to look forward to an imagined near-term future, in which LLMs and other products of machine learning revolt against us, their purported masters. It may be more useful to look back to the past origins of the shoggoth, in anxieties about the modern world, and the vast entities that rule it. LLMs – and many other applications of machine learning – are far more like bureaucracies and markets than putative forms of posthuman intelligence. Their real consequences will involve the modest-to-substantial transformation, or (less likely) replacement of their older kin.

If we really understood this, we could stop fantasizing about a future Singularity, and start studying the real consequences of all these vast systems and how they interact. They are so generally part of the foundation of our world that it is impossible to imagine getting rid of them. Yet while they are extraordinarily useful in some aspects, they are monstrous in others, representing the worst of us as well as the best, and perhaps more apt to amplify the former than the latter.

It’s also maybe worth considering whether this understanding might provide new ways of writing about shoggoths. Writers like N.K. Jemisin, Victor LaValle, Matt Ruff, Elizabeth Bear and Ruthanna Emrys have turned Lovecraft’s racism against itself, in the last couple of decades, repurposing his creatures and constructions against his ideologies. Sometimes, the monstrosities are used to make visceral and personally direct the harms that are being done, and the things that have been stolen. Sometimes, the monstrosities become mirrors of the human.

There is, possibly, another option – to think of these monstrous creations as representations of the vast and impersonal systems within which we live our lives, which can have no conception of justice, since they do not think, or love, or even hate, yet which represent the cumulation of our personal thoughts, loves and hates as filtered, refined and perhaps distorted by their own internal logics. Because our brains are wired to focus on personal relationships, it is hard to think about big structures, let alone to tell stories about them. There are some writers, like Colson Whitehead, who use the unconsidered infrastructures around us as a way to bring these systems into the light. Might this be another way in which Lovecraft’s monsters might be turned to uses that their creator would never have condoned? I’m not a writer of fiction – so I’m utterly unqualified to say – but I wonder if it might be so.

[Thanks to Ted Chiang, Alison Gopnik, Nate Matias and Francis Spufford for comments that fed both into this and the piece with Cosma – They Are Not To Blame. Thanks also to the Center for Advanced Study in the Behavioral Sciences at Stanford, without which my part of this would never have happened]

Addendum: I of course should have linked to Cosma’s explanatory piece, which has a lot of really good stuff. And I should have mentioned Felix Gilman’s The Half Made World, which helped precipitate Cosma’s 2012 speculations, and is very definitely in part The Industrial Revolution As Lovecraftian Nightmare. Our Crooked Timber seminar on that book is here.

Also published on Substack.

{ 8 comments }

MisterMr 07.03.23 at 2:47 pm

While Lovecraft was very racist, he was quite explicit in his writings that his stories were about what we would now call “disenchantment”, the idea that when humans were more ignorant, in antiquity, they believed that they lived in a world that reflected their inner “values”, e.g. imagined that if someone broke an oath a guy with a beard would shoot them dead with lightning.

Thanks to science, though, we realized that the world doesn’t work this way, and we are alone in a meaningless world (where “meaningless” basically means “amoral”, though the disenchantment guys weren’t always clear on this, but for example Nietzsche’s God is dead is another example of disenchantment thinking).

In this sense, this:

to think of these monstrous creations as representations of the vast and impersonal systems within which we live our lives, which can have no conception of justice, since they do not think, or love, or even hate, yet which represent the cumulation of our personal thoughts, loves and hates as filtered, refined and perhaps distorted by their own internal logics

is closer to what Lovecraft meant IMHO.

Then Lovecraft was also very racist, and probably had also emotional problems so that both his racism and his idea of disenchantment were probably at least in part influenced by his emotional problems, but this is another story.

Kenny Easwaran 07.03.23 at 7:44 pm

This is really good. I’ve long thought that the analogy between AIs and bureaucracies and markets is an important one.

I do think this piece is a bit too sanguine about the potential dangers of having a new form of inhuman organization on top of the ones we already have. It may turn out that many of these AIs are no more dangerous than a pass-through corporation laundering rent through the Cayman Islands, or the bureaucracy of the DMV that won’t let you change the information on line C until you’ve filled out form 5239, which requires the new information on line C to already be in place.

But I don’t think we can be that confident that none of the new generation of AIs will turn out to be worse than the Dutch East India Company, or the Soviet bureaucracy.

Jim Harrison 07.03.23 at 11:33 pm

How is Milton Friedman’s economy in which all that matters is shareholder equity different from the postulated AI program that destroys the world in order to make the most possible paper clips?

Alan White 07.04.23 at 5:19 am

An old 1970 film, Colossus: The Forbin Project, is always brought to mind when I read about the threats of AI. Certainly a parable about giving over authority to machines that deserves a look.

Doug R 07.05.23 at 4:57 pm

That description reminded me of this scene:

Kevin Lawrence 07.06.23 at 12:37 pm

Thank you for this. Very interesting. The idea that getting a passport in future will require doing battle with an LLM sold to the government by IBM gives me something else to worry about (I’m currently 5 years and £15k into a battle to fix a spelling mistake on my daughter’s American birth certificate so she can get her British passport and work in this country). My takeaway is that the further away our systems and institutions get from humanity, the harder it will be for humans to deal with them — especially when they have a situation slightly outside the norm.

I think we can agree that LLMs are not intelligent but it’s just a matter of time before, borrowing from Jane Goodall, ChatGPT-10 combined with XYZ-123 behaves in such a way that “if we were to see a young child behaving the same way, we would say the child is intelligent”. The proof of the pudding will be in the eating, not the definition of pudding.

Have you seen AutoGPT? You describe a high-level task and ask how it would accomplish it and, when it answers with a list of sub-tasks, ask recursively how it would solve those until it accomplishes the original goal. For example, you ask it how to build a t-shirt business and it answers that you need a t-shirt design, a service to print and mail the t-shirts, a website to advertise them etc. AutoGPT was able to break out of its sandbox, get the t-shirts designed and printed and build a website on software that it downloaded from the internet. Perhaps a future, nefarious operator will think a sandbox unnecessary.

A year ago, I would have said it was ridiculous to consider LLMs intelligent. Now, I’m not sure what we mean by ‘intelligent’ any more. I think a rogue AI — or an AI operated by rogues — can create more havoc to our systems and institutions than even an incompetent government bureaucracy. I’m afraid.

bad Jim 07.07.23 at 5:14 am

For some reason this reminds me of a recent article in the Atlantic about an example of biodiversity which suggests that nature isn’t necessarily ruthlessly competitive.

The neutral theory of ecology offers the possibility that the initially sluggish creations of our ingenuity, even in the event of their ascendance, might well find it convenient to coexist with our descendants.

GMcK 07.12.23 at 3:32 pm

This seems plausible. It’s comforting, though, to notice that the shoggoth bureaucracies and markets have failed to successfully rebel against their masters and destroy the world, despite centuries of trying. Can you imagine the Department of Motor Vehicles or the New York Stock Exchange rebelling against their masters and running amok?

Cosma Shalizi and Francis Spufford have written about how markets that become focused enough to consider taking control of their own destiny, whether via monopoly or Marxist managed economies, become brittle and collapse due to the complexity of their internal interconnections. “An AI” that can avoid that fate will, as an incidental prerequisite, have effectively solved the hardest problem in computer science, P vs NP. And as for an AI instance that “wants” to rule the world successfully, the current generation of AI engineers have no clues whatsoever how to design that capability. Handwaving assumptions of future genius engineers are just wishful thinking.
